
Why Multi-Camera Synchronization is a Key Feature in Cameras that Enable Autonomous Mobility


Article from e-con Systems

Embedded vision is a fundamental component of autonomous mobility systems, helping them achieve high levels of accuracy, reliability, and safety. It is no surprise, then, that more autonomous robots and vehicles are being equipped with high-resolution cameras. When paired with advanced processors, these cameras help the robots navigate and interact with their environments effectively.

The selection of these cameras is based on specific criteria such as sensor type, resolution, and frame rate. The primary function of these systems is to capture, process, and analyze visual data for tasks like object recognition, obstacle detection, and navigation. At the core of this autonomous innovation lies multi-camera synchronization.

In this blog, you’ll learn about the key role played by multi-camera synchronization, how it works, and examples of autonomous vehicles using this path-breaking technology.

Understanding Multi-Camera Synchronization

Strategic placement

As you can imagine, camera placement is critical in autonomous vehicles. Cameras are installed at predetermined points around the vehicle, selected based on the vehicle’s design and the intended use case. This arrangement ensures comprehensive coverage, enabling the system to gather visual data from all angles, providing a complete 360-degree field of view.

The strategic placement also takes into account the field of view of each camera, maximizing coverage while keeping overlap between neighboring cameras to what downstream tasks such as stitching and depth estimation need. This matters most in complex environments, where spatial data is the backbone of navigation and obstacle avoidance.
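As a rough illustration of the coverage idea, the sketch below checks whether a set of cameras leaves any horizontal angle around the vehicle unobserved. The function name `coverage_gaps` and the `(yaw_deg, hfov_deg)` description of a camera are assumptions made for this example, and it considers only the horizontal field of view, ignoring mounting height, lens distortion, and occlusion by the vehicle body.

```python
def coverage_gaps(cameras):
    """Return the uncovered angular intervals, in degrees, around the vehicle.

    cameras: list of (yaw_deg, hfov_deg) tuples. yaw_deg is the direction the
    camera points, measured from the vehicle's forward axis, and hfov_deg is
    its horizontal field of view. Both are hypothetical inputs for this sketch.
    """
    # Turn each camera into one or two [start, end) intervals within 0..360,
    # splitting any interval that wraps past 360 degrees.
    intervals = []
    for yaw, hfov in cameras:
        start = (yaw - hfov / 2) % 360
        end = start + hfov
        if end <= 360:
            intervals.append((start, end))
        else:
            intervals.append((start, 360.0))
            intervals.append((0.0, end - 360))
    intervals.sort()

    # Sweep from 0 to 360 and record every stretch that no interval covers.
    # A gap that straddles 0 degrees is reported as two separate pieces.
    gaps = []
    covered_to = 0.0
    for start, end in intervals:
        if start > covered_to:
            gaps.append((covered_to, start))
        covered_to = max(covered_to, end)
    if covered_to < 360:
        gaps.append((covered_to, 360.0))
    return gaps
```

With four cameras facing front, right, rear, and left, a 100-degree lens on each closes the ring with a 10-degree overlap between neighbors, while an 80-degree lens would leave four 10-degree gaps at the corners.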

Synchronization

Synchronization in multi-camera systems is a complex process that ensures all cameras operate in unison, capturing images at precisely the same moment. This synchronization is achieved through a combination of hardware and software mechanisms.

Hardware synchronization often involves using a common clock signal to trigger image capture simultaneously. Software synchronization may involve time-stamping each image frame and aligning them in post-processing. This precise synchronization is essential for creating a cohesive and accurate representation of the vehicle’s surroundings, which is necessary for real-time decision-making and navigation.
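To make the software approach more concrete, here is a minimal sketch of timestamp-based alignment. It assumes each camera delivers frames with capture timestamps on a shared time base, in seconds, and groups frames whose timestamps fall within a chosen tolerance of a reference camera. The names `align_frames`, `nearest_index`, and `tolerance_s` are hypothetical and do not come from any particular camera SDK.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the entry closest to t in a non-empty, sorted list."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose whichever neighbor lies closer to t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align_frames(streams, tolerance_s):
    """Group frames from several cameras by capture time.

    streams: dict mapping camera name -> sorted list of timestamps (seconds).
    The first camera in the dict acts as the reference. A group is kept only
    if every other camera has a frame within tolerance_s of the reference.
    Returns a list of dicts mapping camera name -> frame index.
    """
    names = list(streams)
    reference = names[0]
    groups = []
    for ref_idx, ref_t in enumerate(streams[reference]):
        group = {reference: ref_idx}
        for name in names[1:]:
            ts = streams[name]
            j = nearest_index(ts, ref_t) if ts else None
            if j is None or abs(ts[j] - ref_t) > tolerance_s:
                group = None  # this camera has no frame close enough
                break
            group[name] = j
        if group is not None:
            groups.append(group)
    return groups
```

The tolerance is a design choice: it should be well below the frame interval (about 33 ms at 30 fps) and small enough that scene motion between the matched frames is negligible for the task. Hardware triggering avoids this matching step altogether because the exposures start together.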

Applications of Multi-Camera Synchronization in ADAS

360-degree surround-view

Multi-camera synchronization creates a 360-degree surround-view, enhancing driver awareness and vehicle safety. This system integrates images from multiple cameras positioned around the vehicle to provide a bird’s-eye view of the surrounding area. It is invaluable in various driving scenarios, such as parking, navigating tight spaces, or maneuvering in busy traffic.

Image stitching

Image stitching is a direct application of multi-camera synchronization, where images from different cameras are combined to create a single, coherent visual. This is useful in providing extended lateral and rear views, which are required for performing tasks like safe lane changes and merging. The synchronized cameras capture overlapping fields of view, which are then digitally stitched together, ensuring smooth transitions.
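The blending step at the seam can be sketched in a few lines. The example below assumes two grayscale images, stored as lists of rows, that have already been rectified so that the last `overlap` columns of the left image show the same scene as the first `overlap` columns of the right image. Real stitching pipelines also need calibration and geometric warping, which are left out here, and the function name `stitch_with_feathering` is made up for illustration.

```python
def stitch_with_feathering(left, right, overlap):
    """Join two pre-aligned grayscale images with a linear cross-fade.

    left, right: lists of rows, each row a list of pixel intensities.
    overlap: number of columns the two images share at the seam.
    """
    stitched = []
    for lrow, rrow in zip(left, right):
        split = len(lrow) - overlap  # first column of the shared region in lrow
        blended = []
        for k in range(overlap):
            # The weight moves gradually from the left image to the right one.
            w = (k + 1) / (overlap + 1)
            blended.append((1 - w) * lrow[split + k] + w * rrow[k])
        stitched.append(lrow[:split] + blended + rrow[overlap:])
    return stitched
```

If both cameras exposed at the same instant, the overlapping columns agree and the cross-fade is invisible. If the exposures are offset in time, a moving object sits at different positions in the two images and the blend shows it twice, which is why stitching depends on synchronized capture.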

Remote driving assistance

Multi-camera synchronization enhances remote driving assistance systems, where an operator remotely controls or assists the vehicle. The system can provide the operator with a clear visual representation of the vehicle’s surroundings. This is crucial for remote decision-making in unforeseen driving conditions. The operator, with access to real-time visual information, can better assess situations and provide accurate guidance.

Benefits of Multi-Camera Synchronization in Autonomous Mobility

Situational awareness

Multi-camera synchronization provides autonomous vehicles with a comprehensive and cohesive view of their surroundings. This synchronized 360-degree coverage ensures that the vehicle is aware of its environment from all angles, significantly reducing blind spots and enhancing situational awareness.

Accurate spatial perception and depth estimation

The ability to accurately gauge distances and understand the spatial relationships between objects is a key advantage. Autonomous systems analyze images from multiple synchronized cameras to estimate depth and perceive the 3D structure of the environment. This is required for tasks like safe lane navigation, obstacle avoidance, and precise parking maneuvers.
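One way to see why timing matters for depth is the textbook relation for a rectified stereo pair, where depth is focal length times baseline divided by disparity. The sketch below assumes that simple pinhole model, which the article itself does not specify, and all function and parameter names are illustrative.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def disparity_shift_from_skew(focal_px, depth_m, lateral_speed_mps, skew_s):
    """Apparent disparity change, in pixels, caused by a capture-time offset.

    An object moving sideways at lateral_speed_mps travels speed * skew
    meters between the two exposures. At depth Z that displacement projects
    to f * dx / Z pixels, which the stereo matcher cannot tell apart from
    true disparity.
    """
    return focal_px * lateral_speed_mps * skew_s / depth_m
```

As a worked example under these assumptions: with a 1000-pixel focal length and a 0.2 m baseline, an object 10 m away produces 20 pixels of disparity. If that object crosses the view at 10 m/s and the two cameras fire 10 ms apart, the image shifts by about 10 pixels. Depending on the direction of motion this adds to or subtracts from the true disparity, and in the additive case the system would read 30 pixels and place the object at roughly 6.7 m instead of 10 m.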

Reduced blind spots and false alarms

Positioning multiple cameras around the vehicle and synchronizing them creates a comprehensive 360-degree view with fewer blind spots. Because every camera reports the scene at the same instant, a potential hazard seen by one camera can be cross-checked against its neighbors, which reduces the likelihood of false alarms. Synchronized multi-camera systems also supply this information in real time, so the vehicle can make informed decisions.

Precise mapping and localization

Each camera captures different aspects and angles of the environment, and when these perspectives are synchronized, they combine into a detailed spatial map. This helps the system understand its surroundings accurately. Furthermore, the synchronized data from multiple cameras supports accurate localization, enabling the vehicle to pinpoint its position within the map. This is important for path planning and maneuvering, especially in complex environments.

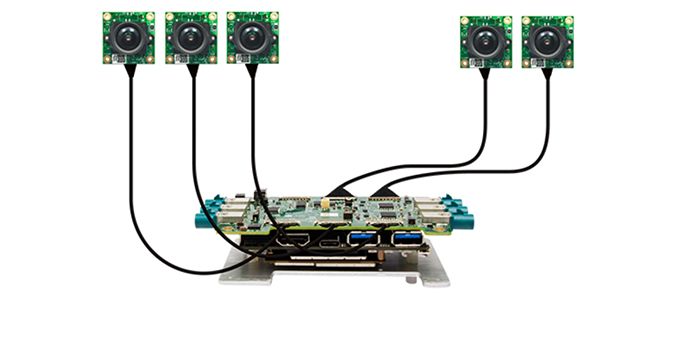

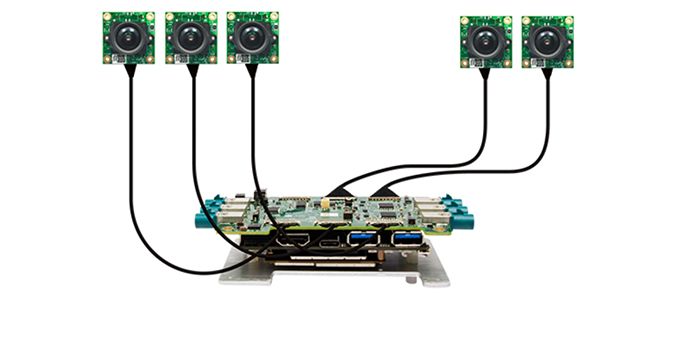

e-con Systems’ Multi-Camera Solutions for Autonomous Systems

e-con Systems has been designing, developing, and manufacturing OEM cameras for 20+ years and has a strong track record of multi-camera system integration in various industries. Our unique advantage lies in our partnerships with leading sensor manufacturers like Sony, onsemi, and OmniVision. As a key partner of NVIDIA, we also deliver advanced technology solutions.

We specialize in streamlining camera evaluations, offering a range of multi-camera solutions for 2 to 8 camera systems with in-depth customization capabilities to meet specific needs.

Visit our Multi-Camera Solutions page or use our Camera Selector to explore our full portfolio.

About Suresh Madhu


Suresh Madhu is the product marketing manager with 16+ years of experience in embedded product design, technical architecture, SOM product design, camera solutions, and product development. He has played an integral part in helping many customers build their products by integrating the right vision technology into them.

The content & opinions in this article are the author’s and do not necessarily represent the views of AgriTechTomorrow

e-con Systems

e-con Systems has been a pioneer in the embedded vision space, designing, developing, and manufacturing custom and off-the-shelf camera solutions since 2003. With a team of 300+ highly skilled core engineers, our products are currently embedded in over 350 customer products. So far, we have shipped over 2 million cameras to the United States, Europe, Japan, South Korea, and many other countries. Our cameras are suitable for applications such as autonomous mobile robots, smart agricultural devices, medical diagnostic systems, smart checkouts and carts, sports broadcasting systems, industrial handhelds, drones, and biometric systems.
